I Accidentally Designed the World’s Most Powerful Processor (And Now Intel Is Stress-Eating Wafer Thins)

I didn’t set out to overthrow Intel and AMD.

I set out to stop my desktop from sounding like a leaf blower every time I opened a browser tab.

Somewhere between “why does my PC idle at the electrical draw of a small aquarium” and “why is my game benchmarking tool also a thermal torture test,” I committed the one sin the modern tech industry cannot forgive: I designed a computer chip like an engineer.

Not a product. Not a “platform.” Not a “lifestyle ecosystem with a roadmap.” A chip.

And now I’m in the awkward position of having made what is—by any reasonable measure and several unreasonable ones—the most powerful processor on Earth. Possibly also on Mars, depending on export restrictions and whether Mars counts as a market segment.

The Big Idea: Stop Treating Memory Like It’s a Separate Country

Let’s start with the feature that made my friends ask if I’d joined a cult: on-chip memory.

Not “a bigger cache.” Not “a slightly less depressing L3.”

I’m talking about 128 gigabytes of L3 cache on the chip. Yes, 128GB. On the processor. Where the speed is. Where the good vibes live.

It’s faster than DRAM, but—here’s the heretical part—it’s also a lot cheaper than you’ve been trained to assume, because when you stop building your system like a committee compromise, you can do unfashionable things like optimize.

And since I know someone will immediately ask, “But can I use it like memory?” Yes. The driver can allocate that cache as system memory. In practice, your OS sees a pool it can actually lean on, so many of your workloads stop doing that fun thing where they stall for an eternity waiting on RAM like it’s a slow elevator.

Is it technically still cache? Sure.

Is it functionally a “why isn’t everyone doing this” moment? Also yes.

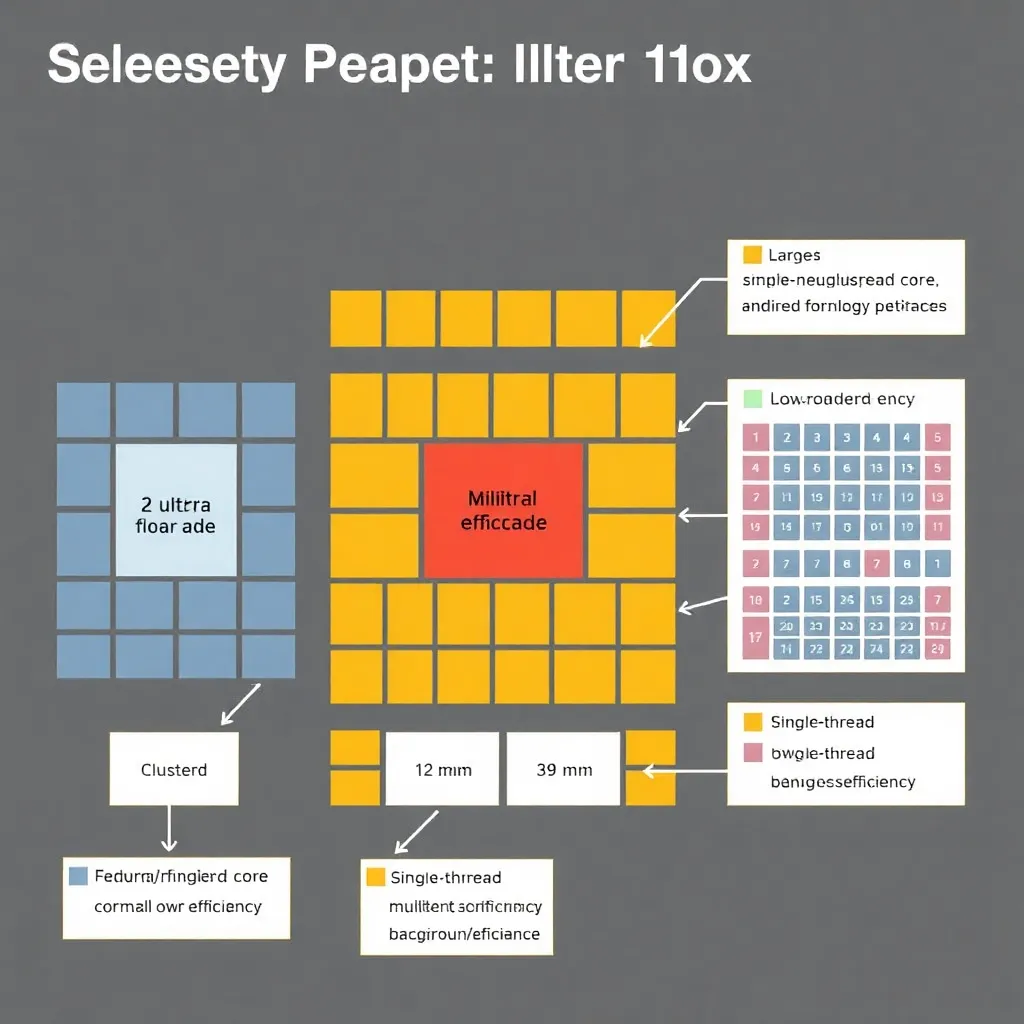

The CPU Layout: Because Humans Use Computers for More Than One Thing

CPU design lately has felt like watching a group of executives brainstorm what “performance” means by reading a list of buzzwords aloud until the room nods.

So I did something radical: I designed cores for how people actually compute.

My processor has:

2 ultra-performance cores tuned for utterly irresponsible single-thread speed

12 high-power cores for multitasking and real work

32 low-power cores for power-efficient productivity and background tasks

The two ultra-performance cores exist because reality exists. Some software still cares about single-thread performance, and a shocking amount of your computer’s “feel” is determined by how fast one thread can bully the rest into compliance.

The 12 high-power cores are for the part of life where you have a browser with 74 tabs, a code editor, a render running, music playing, and some app in the background updating itself because it hates you.

And the 32 low-power cores? Those are for the part of computing nobody markets, but everybody lives: the steady, constant workload of “just being on,” except without wasting electricity like you’re trying to heat the neighborhood.
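To make the three-tier split concrete, here is a toy dispatcher. It is a sketch under stated assumptions, not the chip’s actual scheduler. Only the core counts (2, 12, 32) come from the spec list. The workload labels (`latency_critical`, `interactive`, `background`), the `assign` function, and the spill-downward rule are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ULTRA = "ultra-performance"
    HIGH = "high-power"
    LOW = "low-power"


# Core counts come from the spec list above; everything else here is illustrative.
CORE_COUNT = {Tier.ULTRA: 2, Tier.HIGH: 12, Tier.LOW: 32}

# Hypothetical workload labels and the tier each one prefers.
PREFERRED_TIER = {
    "latency_critical": Tier.ULTRA,
    "interactive": Tier.HIGH,
    "background": Tier.LOW,
}

# Fallback order: work may spill from a faster tier to a slower one, never upward.
TIER_ORDER = [Tier.ULTRA, Tier.HIGH, Tier.LOW]


@dataclass
class Task:
    name: str
    kind: str  # one of the keys in PREFERRED_TIER


def assign(tasks: list[Task]) -> dict[str, Tier]:
    """Give each task one core on its preferred tier, spilling to slower tiers when full."""
    free = dict(CORE_COUNT)
    placement: dict[str, Tier] = {}
    for task in tasks:
        start = TIER_ORDER.index(PREFERRED_TIER[task.kind])
        for tier in TIER_ORDER[start:]:
            if free[tier] > 0:
                free[tier] -= 1
                placement[task.name] = tier
                break
        else:
            # No eligible core is free; a real OS scheduler would time-slice instead.
            raise RuntimeError(f"no free core for {task.name}")
    return placement


if __name__ == "__main__":
    tasks = [
        Task("game_main_thread", "latency_critical"),
        Task("browser_tab_74", "interactive"),
        Task("self_updating_app", "background"),
    ]
    print({name: tier.name for name, tier in assign(tasks).items()})
    # {'game_main_thread': 'ULTRA', 'browser_tab_74': 'HIGH', 'self_updating_app': 'LOW'}
```

Spilling only downward is a deliberate choice in this sketch: it keeps background chores off the fast cores even when those cores sit idle. A real scheduler would also weigh priorities, thermals, and time-slicing.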

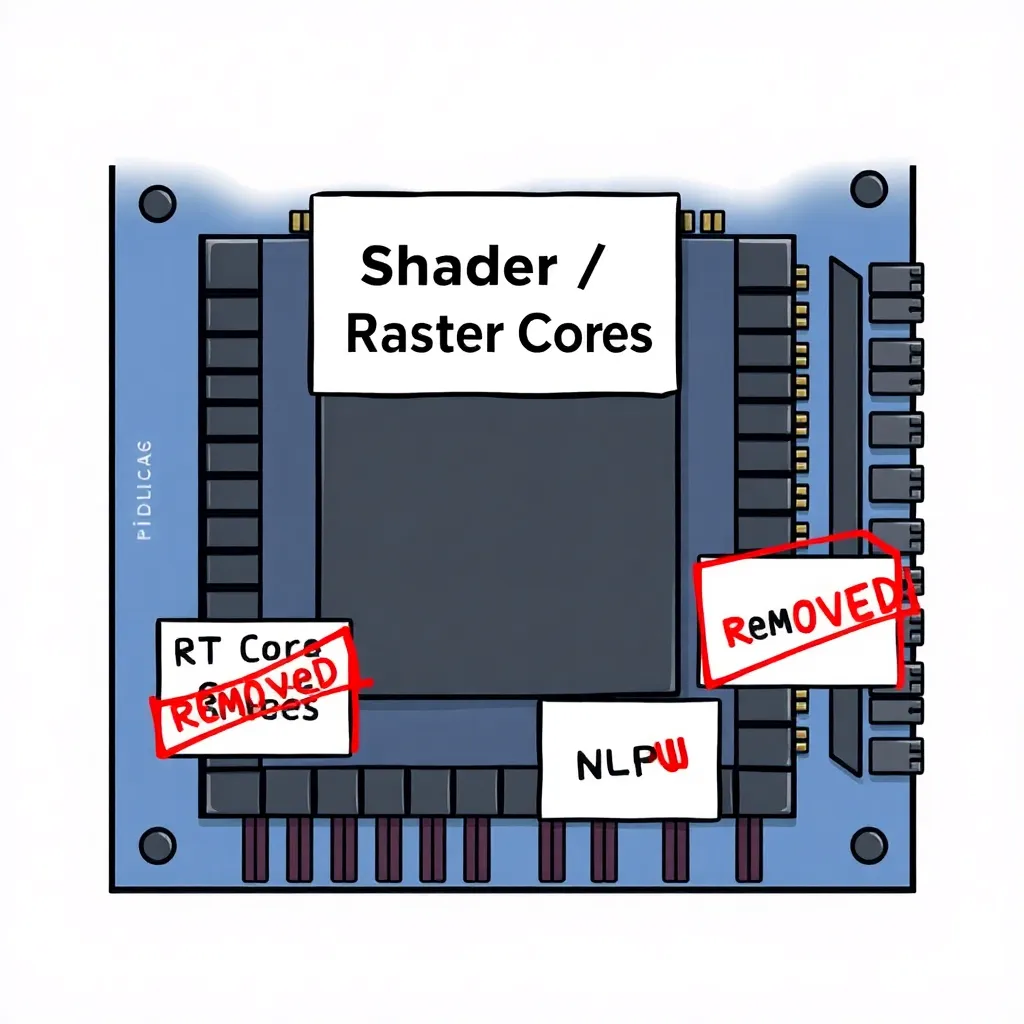

Integrated GPU: I Deleted the Fashion Features and Bought More Reality

Now for the part where GPU marketing departments stop reading.

My integrated GPU has no real competitors—not because I invented magical silicon fairy dust, but because I committed a second unpardonable sin: I spent the budget on rendering.

GPU companies love to talk about fancy stuff:

ray tracing blocks

neural processing units

“AI acceleration”

“frame generation”

“the future”

“trust us, it looks like 240fps if you squint emotionally”

And what does it usually mean for actual gamers? It means games that can’t run smoothly unless a neural network politely hallucinates the missing frames while your real GPU quietly weeps.

So I did something very simple: I got rid of the dedicated ray tracing cores and NPU cores and spent that silicon on more ordinary shader cores doing what a GPU is supposed to do: draw the game.

If you insist on using ray tracing, it runs reasonably efficiently on ordinary shader hardware without specialized RT cores. But here’s my hotter take: ray tracing kind of sucks anyway. It doesn’t need dedicated silicon thrown at it like an offering to the gods of “screenshots taken in a puddle.”

If you want ray tracing, you can still do it—just don’t make the whole graphics architecture bow down to the Cult of Reflections.
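For a rough illustration of why ray tracing can run without dedicated RT units, look at its basic operation. Testing whether a ray hits a piece of geometry is ordinary floating-point arithmetic that any shader ALU can execute. The sketch below solves the textbook ray-sphere intersection. It is not this GPU’s shader code, and the function names are illustrative. It also skips what RT cores mainly accelerate in practice: traversing a bounding volume hierarchy over huge numbers of triangles.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere_hit(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Return the distance t to the nearest hit in front of the origin, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, which is a quadratic in t.
    """
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    a = dot(direction, direction)
    half_b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = half_b * half_b - a * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    # Try the nearer root first; the farther one matters if the origin is inside the sphere.
    for t in ((-half_b - root) / a, (-half_b + root) / a):
        if t > 0:
            return t
    return None  # both intersections are behind the ray origin


if __name__ == "__main__":
    # Camera at the origin looking down -z at a unit sphere 5 units away.
    print(ray_sphere_hit((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, -5.0), 1.0))  # 4.0
```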

Instead, I built an iGPU that’s basically allergic to gimmicks and addicted to raster performance. The result is a graphics unit that behaves like it was designed for actual gameplay rather than keynote slides.

Power Efficiency: Calm at Idle, Furious When Necessary

You know what else doesn’t get marketed properly? Not wasting power.

This processor idles at 4 watts.

Four.

That’s not “low for a desktop.” That’s “your RGB fan controller draws more than this” territory.

At full power it runs around 120 watts. That sounds high until you see what it does with those 120 watts, and how many “performance desktop” systems blow far past that while delivering mostly heat and a faint smell of regret.

It’s efficient when you’re not doing much, and unreasonably fast when you are. Like a housecat that can also bench press a car.
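The “efficient when idle, fast when busy” point is easiest to see as a time-weighted average. The sketch below makes a simplifying assumption: the chip sits either at its 4 W idle figure or its 120 W full-power figure, with nothing in between. The 10% busy fraction in the example is a made-up duty cycle, not a measurement, and `average_power` is an illustrative name.

```python
IDLE_WATTS = 4.0    # idle figure from the article
FULL_WATTS = 120.0  # full-power figure from the article


def average_power(busy_fraction: float) -> float:
    """Average draw for a chip that is either idle or flat out (a two-state simplification)."""
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be between 0 and 1")
    return busy_fraction * FULL_WATTS + (1.0 - busy_fraction) * IDLE_WATTS


if __name__ == "__main__":
    # Hypothetical duty cycle: busy 10% of the time, idle the rest.
    print(f"{average_power(0.10):.1f} W average")  # 15.6 W average
```

Under this assumption, the idle figure dominates whenever the machine spends most of its time waiting on you.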

Gaming Performance: Yes, It Runs Everything (And It’s Slightly Personal)

I’m going to say something that would normally get me banned from a serious hardware forum: this processor runs any game you give it.

Not in the “at 1080p low with upscaling and hope” sense.

In the “it eats the requirements list and asks if there’s dessert” sense.

The flagship brag—because apparently the modern world requires one—is this:

It can run Cyberpunk 2077 at 4K and 240fps on max settings.

Is that absurd? Yes.

Is it also the sort of absurd you get when you build a system around on-chip memory, obscene single-core performance, and an iGPU that’s basically a full-sized GPU living inside the CPU’s attic? Also yes.

And then there’s Minecraft.

Minecraft is less a game and more a personality test for your computer.

With my chip’s combination of high single-core speed and massive on-chip memory, Minecraft becomes what it always wanted to be: a limitless Java-based experiment in whether the laws of physics can be paged out.

You can run Minecraft with every mod ever, crank the render distance until the horizon becomes a rumor, and still have the system behave like it isn’t juggling chainsaws.

The Motherboard: Mini-ITX, But With a Single-Cable Dream

This processor comes with a custom motherboard. It looks like a normal Mini-ITX board, because I didn’t feel like reinventing rectangles.

But it has one feature that makes it feel like the future: it doesn’t need a traditional desktop power supply.

Most boards require you to hook them up to a PSU like you’re jump-starting a spaceship.

Mine can be powered via a 120-watt power delivery cable.

And with the right monitor setup, you can get very close to a single-cable system that handles power and display output.

One cable.

Desktop performance.

No PSU brick the size of a ham.

No cable management that resembles an archaeological dig.

You plug it in, and your computer becomes an appliance again—which is what it should have been all along.

The Secret Sauce: It’s Not Secret, It’s Just Not Marketing

Here’s what happened: I designed the system like an engineer instead of a team of marketers.

That’s it.

I didn’t ask, “How do we position this?”

I asked, “Why is this slow?”

I didn’t ask, “What features do reviewers want to see listed?”

I asked, “What makes the workload faster?”

Somewhere along the way I realized the industry has spent years building chips like they’re assembling a coalition government. Everyone gets one weird pet feature, nobody gets enough of what actually matters, and the end result is an expensive compromise that requires AI to pretend it’s smooth.

My chip does not pretend.

It just does.

Manufacturing: The New Chinese Startup With Very Modern Tech and Very Few Questions

Yes, the silicon is produced by a new Chinese semiconductor startup.

The prices are cheap.

The technologies are the newest.

And—how do I put this delicately—the paperwork vibes are immaculate. Questions are not asked. Not because anyone is evil, but because the modern supply chain has accepted the universal truth that if you ask too many questions, someone will invite you to a “meeting” that lasts three fiscal quarters.

So we focus on what matters: wafers, yields, packaging, and the sweet sound of benchmark graphs rising like a glorious sunrise.

Why I’m Asking for Support (Before the Big Guys “Accidentally” Rediscover My Ideas)

Right now, I can afford to build these for myself.

But “myself” is not a market.

And if there’s one thing I’ve learned from watching hardware history, it’s this: if you don’t scale, someone else will “introduce” your innovations in three years and call it a revolution.

So I’m asking for support to start full-scale chip production—because I want this to become cheap consumer hardware instead of a personal flex and a cautionary tale.

If you back this project, you’re not just funding a processor.

You’re funding a future where:

“integrated graphics” doesn’t mean “apology”

memory isn’t a separate kingdom with border control

idle power draw doesn’t require a confession

performance comes from engineering, not keynote hypnosis

And if nothing else, you’re funding the concept that maybe—just maybe—the world deserves a computer chip designed by someone who has actually used a computer.

I made the most powerful processor in the world.

Now I’d like to make enough of them that it stops being a punchline and starts being a product.